Government releases guidelines for social media under-16s ban

Kimberly Grabham

20 September 2025, 8:00 PM

The Federal Government has unveiled regulatory guidelines for its landmark social media ban for children under 16, setting clear expectations for platforms while leaving them flexibility in how they comply when the legislation takes effect in December.

Under the new framework released Tuesday, social media companies will not face mandatory universal age verification requirements. However, they must demonstrate comprehensive efforts to identify and remove underage accounts from their platforms.

Communications Minister Anika Wells emphasised that platforms have been given ample preparation time, having had 12 months to develop compliance strategies since the legislation was announced.

"They have no excuse not to be ready," Ms Wells stated during the guidelines release on 16 September.

The government has adopted what officials describe as a "lighter approach" to age verification, prioritising data minimisation while ensuring robust child protection measures.

Social media companies must satisfy the eSafety Commissioner that they have implemented "reasonable steps" to prevent children from accessing their services. This includes establishing effective systems to detect underage users, deactivate their accounts, and prevent re-registration attempts.

Ms Wells highlighted the irony that platforms already utilise sophisticated age assurance technology for commercial purposes.

"Age assurance technology is used increasingly and prolifically among social media platforms for other purposes, predominantly commercial purposes, to protect their own interests," she said.

"There is no excuse for them not to use that same technology to protect Australian kids online."

The minister used a maritime metaphor to describe the government's regulatory approach: "We cannot control the ocean, but we can police the sharks, and today we're making clear to the rest of the world how we can do this."

eSafety Commissioner Julie Inman Grant acknowledged that most platforms will require time to reconfigure their systems and implement new processes, meaning immediate full compliance is unlikely.

The Commissioner has called for public assistance in identifying non-compliant platforms, with complaints to be triaged and directed to appropriate companies.

"That is why we have asked platforms to make discoverable and responsible reporting tools available because we know people will be missed," Ms Inman Grant explained.

"If we detect that there is a really egregious oversight, or too much is being missed, then we will talk to the companies about the need to retune their technologies."

The guidelines establish six core requirements for social media platforms:

• Implementation of careful account detection and removal processes with clear user communication

• Prevention of account re-creation by users whose profiles have been deactivated

• Development of layered age verification approaches that minimise user inconvenience and error rates

• Establishment of accessible appeal mechanisms for users incorrectly identified as underage

• Use of methods beyond simple self-declaration, which alone is insufficient for legal compliance

• Continuous system monitoring and improvement with transparent public reporting on effectiveness

Importantly, the legislation explicitly prohibits platforms from requiring government identification documents for age verification, though companies may offer this as one option within a broader verification framework.

The eSafety Commissioner has deliberately avoided prescribing specific technologies, allowing platforms flexibility in their compliance approaches. Companies will not be required to retain personal data collected during age verification processes.

Ms Inman Grant acknowledged the significant impact the ban will have on young people, noting that while many children and parents welcome the changes, the transition will present challenges.

"We also note this is going to be a monumental event for a lot of children," she said.

"But we know this will be difficult for kids and so we have also released today our commitment to protecting and upholding children's digital rights and recognising that they, their parents and educators, will continue to need education and resources to prepare them for this moment and that is precisely what we are prepared to do."

The government's approach reflects a balance between protecting children online and maintaining user privacy, with platforms now responsible for developing effective systems within the regulatory framework provided.

NEWS

SPORT

RURAL

COMMUNITY

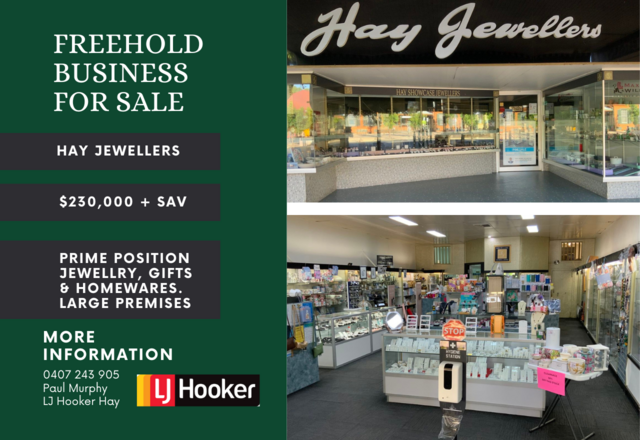

FOR SALE

COMMERCIAL PROPERTY